LWMap/Embedded Agency

08 Mar 2022 - 16 Dec 2022

- A review of the original post by Scott Garrabrant and Abram Demski

- This essay sets up a dichotomy between Alexei, a game-playing robot who is outside the system he interacts with and hence inherently non-reflective, and Emmy, an embedded agent who can, must, and does reflect on her own intelligence.

- I'm not so sure the connection between embeddedness and reflectivity is as clear-cut as it is assumed to be here; in fact, they are not the same thing. To me, calling a mind or agent embedded suggests that it has a rich and complex sensorimotor relationship with its environment, not that it is capable of reasoning about itself.

Emmy thinks about how to think about how to win, Alexei only thinks about how to win.

- Apparently, while Emmy may be embedded, she's embedded not in the real world but in a game world where "winning" is the main concern.

Real life is not like a video game. The differences largely come from the fact that Emmy is within the environment that she is trying to optimize.

- That...is not the only way in which real life is not like a video game! Or even the most important one!

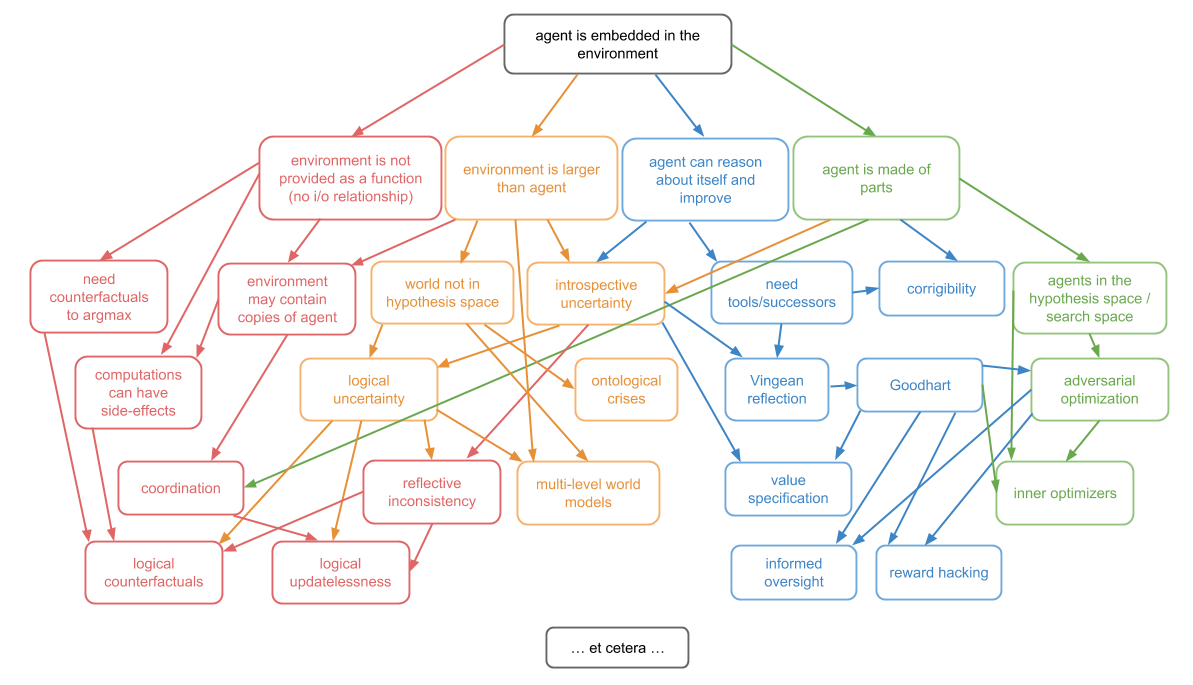

- Embeddedness, here, means an agent that is part of the world it models. From this spins a whole forest of new technical terms and theories for dealing with the resulting reflexivity: extensions to decision theory and to mental representation to handle self-modeling, as well as problems of trust and alignment. These all look interesting, but there are too many of them.

- One curious thing: "embedded cognition" sounds similar to "embodied cognition", and the two terms are often linked (see 4E cognition). But they are not quite the same thing, and in this essay the body makes no appearance whatsoever. In fact, they seem to lead in opposite directions: embeddedness seems to generate complexity, while embodiment suggests models of cognition that are actually simpler.

- It's instructive to compare this treatment of embeddedness with the similar work done under the rubric of situated action. One of the points of situated action was that traditional AI approaches to planning and action were too complex (in both the colloquial sense of "too complicated" and the computer-science sense of "intractably unscalable"). The solution was to envision a radically simplified model of the mechanics of mind that relied on a closer interaction with the actual external world, rather than on a laborious effort of generating a representation and then doing optimization and planning with it.

- Situated Action had some impact, leading to the newer subfields of 4E cognition, but it obviously did not completely succeed in its effort to revolutionize AI, because the kind of stuff here is exactly what it was trying to get rid of. From its standpoint, this is just adding layers of epicycles to something that was already collapsing under the weight of its own complexity and unworkability.

- That is to say, despite this essay's focus on embeddedness, it does not treat it in as radical a form as is required. The SitAct people put a lot of effort into thinking about how people really interact with their environment, for instance how one composes and manages routine activity like cooking breakfast in an actual kitchen, or how people actually manage to hold a conversation.

- Here, embeddedness has been reduced to reflection, meaning that the agent can take itself as an object in its world like any other, and to optimization, which further abstracts away the richness of the world and hides it behind some numbers (aka winning).

- Further reading:

- Brian Cantwell Smith, Varieties of Self-Reference, etc.

- 2nd-order cybernetics (von Foerster, etc.); Glanville, The Purpose of Second-Order Cybernetics

- Foucault, The Order of Things

- Phil Agre & David Chapman, What Are Plans For?; Abstract Reasoning as Emergent from Concrete Activity

- George Ainslie, Breakdown of Will